Introduction

Think of this as a bit of a primer. On this page, I aim to cover why exactly we built these extensions, alongside a brief, high-level overview of how everything currently works.

Why?

This project was born out of a question asked during a demonstration of an augmented reality application we'd built. The question was simply: “How can we make this accessible? What features does Unity have for accessibility?” I had no idea, so I dived in and started researching.

What I found was that Unity did nothing to work with the native accessibility APIs on a user's device. And whilst there were projects such as UAP, they only offered options to make the UI accessible, and not much else. This led me to explore how we could make augmented reality accessible, and what could be done to let Unity hook into native, on-device APIs (text-to-speech and so on) to create an inclusive experience.

As a visually impaired person, my key focus has been building out the extensions to enable people with visual impairments to access augmented and virtual reality, alongside existing Unity 3D games and applications. However, there is no reason these extensions could not be built out to include people with other disabilities. From the outside, Unity might look like a tool that is nigh on impossible to make accessible. However, its versatile nature and its ability to interact with user-created native code make it surprisingly extensible.

What are others doing on this front?

Over the course of development, I've seen efforts from research teams at Microsoft to make VR accessible. However, these appear to tie into the graphics rendering stack and DirectX API on Windows to provide a one-size-fits-all solution that requires no developer input or code changes. The team are also building out extensions for Unity that tie into these low-level tools to provide additional levels of interaction.

However, this has been one of the few examples I've seen of making mixed reality and Unity applications accessible. In a brief study of some of the most popular AR applications, such as Pokémon Go, IKEA Place, and Google's Measure AR, most lacked any kind of accommodation for people with specialised requirements, such as text-to-speech, descriptions of objects, or larger text. Testing these apps and exploring how they behaved with services like TalkBack switched on revealed a large issue to me: nobody was making AR/VR apps accessible!

How?

I'll save a deep dive into the technologies and techniques behind the extension for later in this documentation, but here's a high-level overview to whet your appetite for the time being.

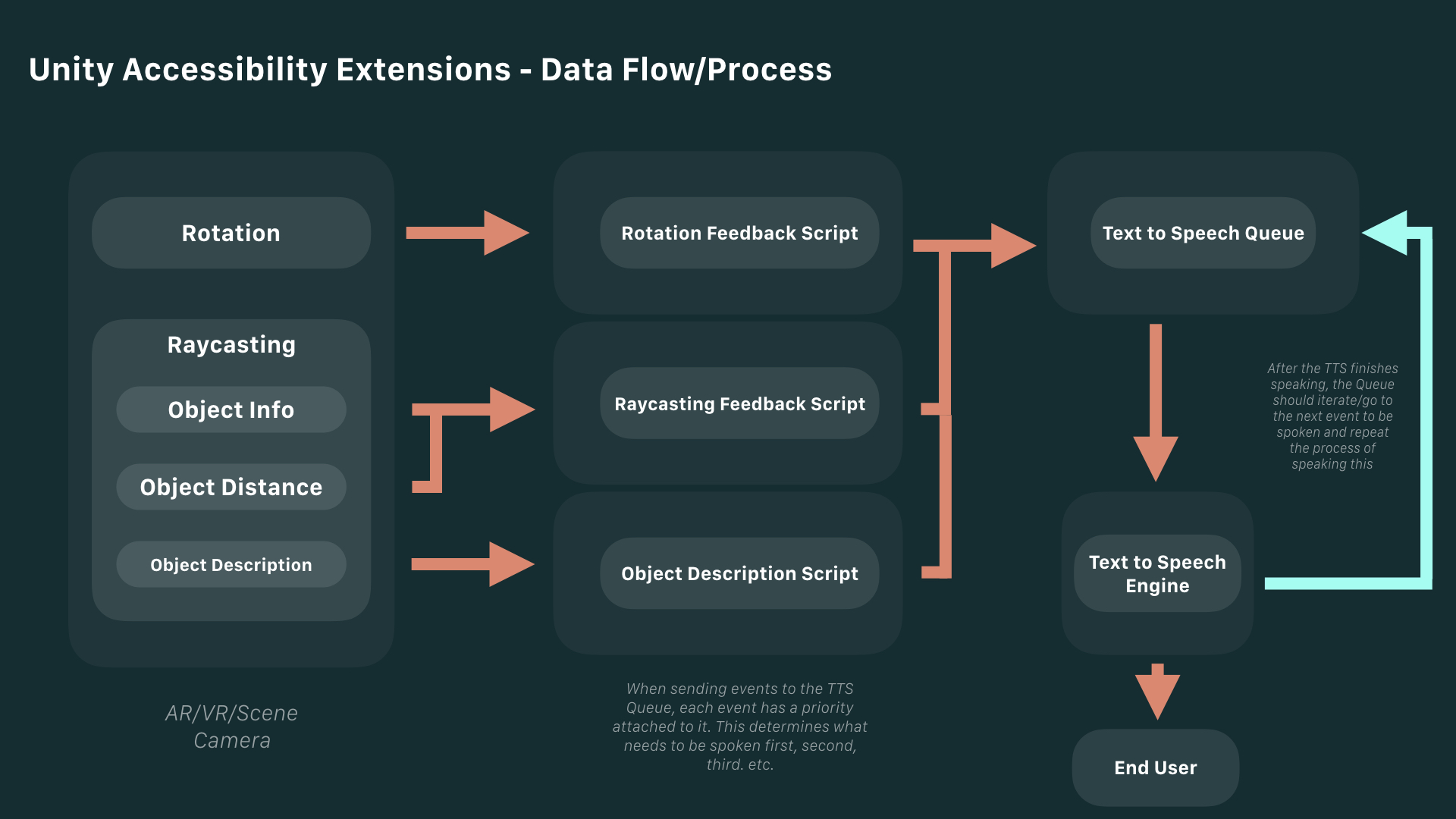

We use raycasting to provide object detection and distance estimation. Once a ray hits an object, we pull several pieces of data from it, including its name, a description (via a custom component that lets a developer attach a long string), and its distance. We feed these, alongside camera rotation data, into a script that parses them and turns them into fully descriptive strings (such as “The object is 1.5m away from you, double tap to hear the description attached to it”). Camera rotation is a useful input because it's safe to assume that in AR and VR the scene camera sits in roughly the same position and perspective as the user's head or viewpoint. The resulting strings are fed into a script that passes the data over to native code, which taps into the text-to-speech engines on both Android and iOS.
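To make the raycast step concrete, here is a minimal sketch in Python. It is an illustration only, not the extension's code (which runs inside Unity), and it assumes each scene object can be approximated by a bounding sphere. A single ray is cast from the camera, the nearest hit is kept, and the hit's name and distance are formatted into the kind of sentence that would be handed to the native text-to-speech bridge. The names `SceneObject`, `Hit`, `raycast` and `describe_hit`, and the exact sentence format, are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneObject:
    # Hypothetical stand-in for a scene object: its name, the developer-supplied
    # description string, and a bounding sphere used for hit-testing.
    name: str
    description: str
    center: Vec3
    radius: float


@dataclass
class Hit:
    obj: SceneObject
    distance: float  # metres along the ray to the sphere's surface


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast(origin: Vec3, direction: Vec3,
            objects: Sequence[SceneObject]) -> Optional[Hit]:
    """Return the nearest object whose bounding sphere the ray hits, if any."""
    length = math.sqrt(_dot(direction, direction))
    d = (direction[0] / length, direction[1] / length, direction[2] / length)
    nearest: Optional[Hit] = None
    for obj in objects:
        oc = _sub(origin, obj.center)
        # Solve |origin + t*d - center|^2 = r^2 for t, with d of unit length:
        # t^2 + 2*b*t + c = 0, so t = -b +/- sqrt(b^2 - c).
        b = _dot(oc, d)
        c = _dot(oc, oc) - obj.radius ** 2
        disc = b * b - c
        if disc < 0:
            continue  # the ray misses this sphere
        root = math.sqrt(disc)
        t = -b - root          # first intersection along the ray
        if t < 0:
            t = -b + root      # the camera is inside the sphere
        if t < 0:
            continue           # the sphere is entirely behind the camera
        if nearest is None or t < nearest.distance:
            nearest = Hit(obj, t)
    return nearest


def describe_hit(hit: Hit) -> str:
    """Build a spoken sentence for the hit (hypothetical wording)."""
    return (f"{hit.obj.name}. The object is {hit.distance:.1f}m away from you, "
            "double tap to hear the description attached to it")


if __name__ == "__main__":
    chair = SceneObject("Chair", "A wooden chair with a red cushion.",
                        (0.0, 0.0, 2.0), 0.5)
    hit = raycast((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [chair])
    if hit is not None:
        print(describe_hit(hit))
```

Running the example casts a ray straight ahead at a chair whose bounding sphere starts 1.5m from the camera, so it prints "Chair. The object is 1.5m away from you, double tap to hear the description attached to it". A real engine would use its own physics colliders rather than spheres; the sphere test is used here only because it keeps the sketch self-contained.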

At the time of writing (16th May), I'm exploring and building a queuing system for information passed to the TTS engine, so that a developer or user can choose which events are spoken first, and so that the TTS engine isn't flooded with simultaneous requests for rotation, object descriptions, etc.
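One way such a queue could work is sketched below in Python. This is a hypothetical design, not the system being built: each utterance carries a category (for example `"object"` or `"rotation"`), each category has a priority that a developer or user can set, and only the newest pending utterance in each category is kept, so a burst of rotation updates collapses into a single announcement. `SpeechQueue`, its methods, and the category names and messages are all assumptions.

```python
import heapq
import itertools
from typing import Dict, List, Optional, Tuple


class SpeechQueue:
    """Hypothetical priority queue of utterances waiting for the TTS engine.

    Lower priority numbers are spoken first; ties are spoken in arrival order.
    Only the newest pending utterance per category is kept.
    """

    def __init__(self, priorities: Dict[str, int], default_priority: int = 100):
        self._priorities = dict(priorities)  # category -> chosen priority
        self._default = default_priority
        self._heap: List[Tuple[int, int, str]] = []  # (priority, sequence, category)
        self._pending: Dict[str, Tuple[int, str]] = {}  # category -> (sequence, text)
        self._counter = itertools.count()

    def set_priority(self, category: str, priority: int) -> None:
        # Affects utterances enqueued after this call.
        self._priorities[category] = priority

    def enqueue(self, category: str, text: str) -> None:
        seq = next(self._counter)
        # Overwriting the pending entry makes any older heap entry for this
        # category stale; stale entries are skipped in next_utterance().
        self._pending[category] = (seq, text)
        priority = self._priorities.get(category, self._default)
        heapq.heappush(self._heap, (priority, seq, category))

    def next_utterance(self) -> Optional[str]:
        """Pop the text that should be handed to the TTS engine next, if any."""
        while self._heap:
            _, seq, category = heapq.heappop(self._heap)
            entry = self._pending.get(category)
            if entry is not None and entry[0] == seq:
                del self._pending[category]
                return entry[1]
            # Otherwise this entry was superseded by a newer message; drop it.
        return None

    def __len__(self) -> int:
        return len(self._pending)


if __name__ == "__main__":
    q = SpeechQueue({"object": 0, "rotation": 1})
    q.enqueue("rotation", "Turned 10 degrees right")
    q.enqueue("rotation", "Turned 20 degrees right")
    q.enqueue("object", "Chair. The object is 1.5m away from you")
    while (text := q.next_utterance()) is not None:
        print(text)
```

In the example, the object announcement is spoken first because its category has the higher priority, and only the latest rotation message survives. Coalescing by category is just one simple way to avoid flooding the engine; a time-based throttle (for example, announcing rotation at most once per second) would be an alternative.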

Image 1: A flow chart outlining the flow of data from the camera in the scene to its endpoint, the text-to-speech engine.